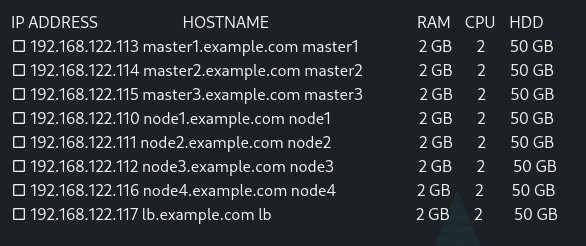

Kubernetes is an open source container orchestration engine. It provides basic mechanisms for deploying, maintaining and scaling applications. Kubernetes is hosted by the Cloud Native Computing Foundation (CNCF). In this tutorial, we will install and configure a multi-master Kubernetes cluster with kubeadm on CentOS 7 / RHEL from scratch. The cluster will have 3 master nodes, 4 worker nodes and an HAProxy load balancer.

Lab Setup for Multi-Master Kubernetes

Prepare the Nodes for the Kubernetes Cluster

- Configure IP Address

- Configure Hostname

- Stop and disable Firewall

- Update the System

- Reboot the system
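The preparation steps above can be sketched as a small shell function. This is a minimal sketch, assuming CentOS 7 with firewalld, root access, and that you pass the node's FQDN; IP address configuration is left out because it depends on your network. The helper names run and prepare_node are hypothetical, and setting DRY_RUN=1 only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: prepare one CentOS 7 node (hostname, firewall, updates, reboot).
# Assumptions: run as root, firewalld is the active firewall, and the
# node's FQDN is passed as the first argument. IP setup is not covered.

# Run a command, or only print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

prepare_node() {
  local fqdn="$1"
  if [ -z "$fqdn" ]; then
    echo "usage: prepare_node <fqdn>" >&2
    return 1
  fi
  run hostnamectl set-hostname "$fqdn"   # persistent hostname
  run systemctl stop firewalld           # stop the firewall now
  run systemctl disable firewalld        # and keep it off after a reboot
  run yum update -y                      # update all packages
  run systemctl reboot                   # reboot into the updated system
}
```

For example, DRY_RUN=1 prepare_node master1.example.com prints the five commands, while prepare_node master1.example.com runs them and reboots the node.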

Configure the hosts file on all the nodes so that the hostnames resolve

[root@master1 ~]# vim /etc/hosts

192.168.122.113 master1.example.com master1

192.168.122.114 master2.example.com master2

192.168.122.115 master3.example.com master3

192.168.122.110 node1.example.com node1

192.168.122.111 node2.example.com node2

192.168.122.112 node3.example.com node3

192.168.122.116 node4.example.com node4

192.168.122.117 lb.example.com lb
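If you prefer not to edit /etc/hosts by hand on every node, the entries can be appended by a small script. This is a sketch, assuming it runs as root on each node; add_k8s_hosts and HOSTS_FILE are hypothetical names. An entry is skipped when its IP address is already present, so running it twice does not create duplicates.

```shell
#!/usr/bin/env bash
# Sketch: append the lab's host entries to a hosts file, skipping any
# entry whose IP is already listed, so re-running it is harmless.
# HOSTS_FILE defaults to /etc/hosts; point it elsewhere to try it safely.
HOSTS_FILE="${HOSTS_FILE:-/etc/hosts}"

# IP, FQDN and short name for every machine (from the hosts list above).
K8S_HOSTS=(
  "192.168.122.113 master1.example.com master1"
  "192.168.122.114 master2.example.com master2"
  "192.168.122.115 master3.example.com master3"
  "192.168.122.110 node1.example.com node1"
  "192.168.122.111 node2.example.com node2"
  "192.168.122.112 node3.example.com node3"
  "192.168.122.116 node4.example.com node4"
  "192.168.122.117 lb.example.com lb"
)

add_k8s_hosts() {
  local entry ip
  for entry in "${K8S_HOSTS[@]}"; do
    ip="${entry%% *}"   # first field is the IP address
    # awk compares the first column exactly, so .11 does not match .113
    if ! awk -v ip="$ip" '$1 == ip { found = 1 } END { exit !found }' "$HOSTS_FILE" 2>/dev/null; then
      echo "$entry" >> "$HOSTS_FILE"
    fi
  done
}
```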

Install Docker

Install Docker and enable it on all the nodes except the load balancer.

[root@master1 ~]# yum install -y docker

[root@master1 ~]# systemctl start docker && systemctl enable docker

[root@master1 ~]# systemctl status docker

[root@master1 ~]# docker version

Client:

Version: 1.13.1

API version: 1.26

Package version: docker-1.13.1-208.git7d71120.el7_9.x86_64

Go version: go1.10.3

Git commit: 7d71120/1.13.1

Built: Mon Jun 7 15:36:09 2021

OS/Arch: linux/amd64

Server:

Version: 1.13.1

API version: 1.26 (minimum version 1.12)

Package version: docker-1.13.1-208.git7d71120.el7_9.x86_64

Go version: go1.10.3

Git commit: 7d71120/1.13.1

Built: Mon Jun 7 15:36:09 2021

OS/Arch: linux/amd64

Experimental: false

Set the Docker cgroup driver to systemd

[root@master1 ~]# cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

EOF
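Docker refuses to start when daemon.json contains a JSON syntax error, so it can be worth checking the file before the restart below. A minimal sketch, assuming python3 or python (CentOS 7 ships Python 2.7) is available for its json.tool module; check_daemon_json is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: check that daemon.json is valid JSON and sets the systemd cgroup
# driver. Assumes python3 or python is installed (only json.tool is used).

check_daemon_json() {
  local file="${1:-/etc/docker/daemon.json}" py
  py="$(command -v python3 || command -v python)" || {
    echo "no python interpreter found" >&2
    return 2
  }
  # json.tool exits non-zero on syntax errors such as a trailing comma.
  if ! "$py" -m json.tool "$file" > /dev/null; then
    echo "invalid JSON: $file" >&2
    return 1
  fi
  if ! grep -q '"native.cgroupdriver=systemd"' "$file"; then
    echo "systemd cgroup driver not set in $file" >&2
    return 1
  fi
  echo "ok: $file"
}
```

After Docker has been restarted, docker info | grep -i 'cgroup driver' should report systemd.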

[root@master1 ~]# mkdir -p /etc/systemd/system/docker.service.d

[root@master1 ~]# vim /etc/systemd/system/docker.service.d/docker.conf

[Service]

ExecStart=

ExecStart=/usr/bin/dockerd

[root@master1 ~]# systemctl daemon-reload

[root@master1 ~]# systemctl restart docker

Stop and Disable the Swap Partition

Stop and disable the swap partition on all the nodes except the load balancer.

[root@master1 ~]# swapoff -a

[root@master1 ~]# sed -i 's/.*swap.*/#&/' /etc/fstab
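The sed command above comments out every line that contains the string swap anywhere, and prefixes another # to lines that are already commented. A narrower variant, sketched here with awk, only comments out active entries whose filesystem type (the third field) is swap and keeps a .bak copy. disable_swap_in_fstab is a hypothetical helper, not part of any package.

```shell
#!/usr/bin/env bash
# Sketch: comment out only active swap entries in an fstab-style file.
# An entry counts as swap when its third field (filesystem type) is "swap".
# The file defaults to /etc/fstab; a .bak copy is written next to it.

disable_swap_in_fstab() {
  local fstab="${1:-/etc/fstab}" tmp
  cp -p "$fstab" "$fstab.bak" || return 1
  tmp="$(mktemp)" || return 1
  awk '
    # keep comments and blank lines as they are
    /^[ \t]*#/ || NF == 0 { print; next }
    # comment out entries whose filesystem type is swap
    $3 == "swap" { print "#" $0; next }
    { print }
  ' "$fstab" > "$tmp" && cat "$tmp" > "$fstab"   # cat keeps the original file's permissions
  rm -f "$tmp"
}
```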

[root@master1 ~]# swapon -s

[root@master1 ~]# cat /etc/fstab | grep -i swap

Change SELinux Mode to Permissive

Change the SELinux mode to permissive on all the nodes.

[root@master1 ~]# setenforce 0

[root@master1 ~]# sed -i --follow-symlinks "s/^SELINUX=enforcing/SELINUX=permissive/g" /etc/sysconfig/selinux

Configure Network Bridge Settings and Enable IPv4 Forwarding on All the Nodes

[root@master1 ~]# vim /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness=0

[root@master1 ~]# sysctl --system

* Applying /usr/lib/sysctl.d/00-system.conf ...

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

* Applying /usr/lib/sysctl.d/10-default-yama-scope.conf ...

kernel.yama.ptrace_scope = 0

* Applying /usr/lib/sysctl.d/50-default.conf ...

kernel.sysrq = 16

kernel.core_uses_pid = 1

kernel.kptr_restrict = 1

net.ipv4.conf.default.rp_filter = 1

net.ipv4.conf.all.rp_filter = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.conf.all.accept_source_route = 0

net.ipv4.conf.default.promote_secondaries = 1

net.ipv4.conf.all.promote_secondaries = 1

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /usr/lib/sysctl.d/99-containers.conf ...

fs.may_detach_mounts = 1

* Applying /usr/lib/sysctl.d/99-docker.conf ...

fs.may_detach_mounts = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /etc/sysctl.d/k8s.conf ...

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

vm.swappiness = 0

* Applying /etc/sysctl.conf ...

Install and Configure HAProxy

Install and configure HAProxy on the load balancer.

[root@lb ~]# yum install haproxy openssl-devel -y

Add the following lines to the haproxy.cfg file:

[root@lb ~]# vim /etc/haproxy/haproxy.cfg

global

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

stats socket /var/lib/haproxy/stats

defaults

mode http

log global

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

frontend kubernetes

mode tcp

bind *:6443

option tcplog

default_backend kubernetes-master-nodes

backend kubernetes-master-nodes

mode tcp

balance roundrobin

option tcp-check

server master1 192.168.122.113:6443 check fall 3 rise 2

server master2 192.168.122.114:6443 check fall 3 rise 2

server master3 192.168.122.115:6443 check fall 3 rise 2

Start and Enable the HAProxy Service

[root@lb ~]# systemctl start haproxy

[root@lb ~]# systemctl enable haproxy

[root@lb ~]# systemctl status haproxy

Set SELinux to permissive on the load balancer as well, and allow processes to bind to IP addresses that are not assigned to the host (net.ipv4.ip_nonlocal_bind):

[root@lb ~]# setenforce 0

[root@lb ~]# sed -i --follow-symlinks "s/^SELINUX=enforcing/SELINUX=permissive/g" /etc/sysconfig/selinux

[root@lb ~]# echo "net.ipv4.ip_nonlocal_bind=1" >> /etc/sysctl.conf

[root@lb ~]# cat /etc/sysctl.conf

[root@lb ~]# sysctl -p

Verify that HAProxy is listening on port 6443:

[root@lb ~]# nc -zv localhost 6443

Ncat: Version 7.50 ( https://nmap.org/ncat )

Ncat: Connection to ::1 failed: Connection refused.

Ncat: Trying next address...

Ncat: Connected to 127.0.0.1:6443.

Ncat: 0 bytes sent, 0 bytes received in 0.01 seconds.

Install and Configure Cloudflare CFSSL on the Load Balancer

[root@lb ~]# yum install wget -y

[root@lb ~]# wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

[root@lb ~]# wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

Once downloaded, copy the binaries to /usr/local/bin and make them executable:

[root@lb ~]# cp cfssl_linux-amd64 /usr/local/bin/cfssl

[root@lb ~]# cp cfssljson_linux-amd64 /usr/local/bin/cfssljson

[root@lb ~]# chmod +x /usr/local/bin/cfssl*

[root@lb ~]# ls -l /usr/local/bin/cfssl*

-rwxr-xr-x 1 root root 10376657 Mar 3 20:04 /usr/local/bin/cfssl

-rwxr-xr-x 1 root root 2277873 Mar 3 20:05 /usr/local/bin/cfssljson

Check the cfssl version:

[root@lb ~]# cfssl version

Version: 1.2.0

Revision: dev

Runtime: go1.6

Create the CA configuration file:

[root@lb ~]# mkdir kube-ca && cd kube-ca

[root@lb kube-ca]# vim ca-config.json

{

"signing": {

"default": {

"expiry": "8760h"

},

"profiles": {

"kubernetes": {

"usages": ["signing", "key encipherment", "server auth", "client auth"],

"expiry": "8760h"

}

}

}

}

Create the CSR configuration file for the CA:

[root@lb kube-ca]# vim ca-csr.json

{

"CN": "Kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "IN",

"L": "Mumbai",

"O": "k8s",

"OU": "Makeuseoflinux",

"ST": "Mumbai"

}

]

}

Generate the CA certificate and private key:

[root@lb kube-ca]# cfssl gencert -initca ca-csr.json | cfssljson -bare ca

Create the certificate request for the etcd cluster:

[root@lb kube-ca]# vim kubernetes-csr.json

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "IN",

"L": "Mumbai",

"O": "k8s",

"OU": "Makeuseoflinux",

"ST": "Mumbai"

}

]

}
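The -hostname list in the next step must contain every IP address and name that clients use to reach etcd and the API server; a missing or mistyped entry makes TLS verification fail for clients connecting through it. As a sketch, the list can be assembled from an array. CERT_HOSTS and san_list are hypothetical names, and the entries simply mirror the list used in this tutorial.

```shell
#!/usr/bin/env bash
# Sketch: build the comma-separated -hostname value for cfssl gencert
# from the masters, the load balancer and the local/in-cluster names.
CERT_HOSTS=(
  192.168.122.113 master1.example.com
  192.168.122.114 master2.example.com
  192.168.122.115 master3.example.com
  192.168.122.117 lb.example.com
  127.0.0.1 kubernetes.default
)

san_list() {
  local IFS=,               # "${array[*]}" joins elements with the first IFS char
  echo "${CERT_HOSTS[*]}"
}

# Example use (on the load balancer, inside kube-ca):
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#     -hostname="$(san_list)" \
#     -profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes
```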

Generate the certificate and private key for the etcd cluster:

[root@lb kube-ca]# cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
-hostname=192.168.122.113,master1.example.com,192.168.122.114,master2.example.com,192.168.122.115,master3.example.com,192.168.122.117,lb.example.com,127.0.0.1,kubernetes.default \
-profile=kubernetes kubernetes-csr.json | cfssljson -bare kubernetes

[root@lb kube-ca]# ls -l

total 40

-rw-r--r-- 1 root root 232 Mar 4 12:08 ca-config.json

-rw-r--r-- 1 root root 1001 Mar 4 12:10 ca.csr

-rw-r--r-- 1 root root 194 Mar 4 12:10 ca-csr.json

-rw------- 1 root root 1675 Mar 4 12:10 ca-key.pem

-rw-r--r-- 1 root root 1359 Mar 4 12:10 ca.pem

-rw-r--r-- 1 root root 1001 Mar 4 12:13 kubernetes.csr

-rw-r--r-- 1 root root 193 Mar 4 12:11 kubernetes-csr.json

-rw------- 1 root root 1675 Mar 4 12:13 kubernetes-key.pem

-rw-r--r-- 1 root root 1590 Mar 4 12:13 kubernetes.pem
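Before copying kubernetes.pem to the nodes, you can confirm that it carries the expected Subject Alternative Names and expiry date. A sketch using openssl, which is assumed to be installed on the load balancer; show_sans and cert_expiry are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: inspect a certificate generated by cfssl.
# Assumes the openssl command-line tool is installed.

# Print the SAN list (DNS names and IP addresses) of a certificate.
show_sans() {
  local cert="${1:-kubernetes.pem}"
  # openssl prints the SAN values on the line after the extension header
  openssl x509 -in "$cert" -noout -text \
    | grep -A1 'X509v3 Subject Alternative Name' \
    | tail -n 1 \
    | sed 's/^[[:space:]]*//'
}

# Print the expiry date as notAfter=<date>.
cert_expiry() {
  local cert="${1:-kubernetes.pem}"
  openssl x509 -in "$cert" -noout -enddate
}
```

Since ca-config.json sets an expiry of 8760h (8760 / 24 = 365 days), cert_expiry kubernetes.pem should show a date about one year after the certificate was generated.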

Copy ca.pem, kubernetes.pem and kubernetes-key.pem to all the Kubernetes nodes:

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

[root@lb kube-ca]# scp ca.pem kubernetes.pem kubernetes-key.pem [email protected]:~/

Configure the YUM Repository to Install Kubernetes 1.23 Cluster Packages on All Nodes Except the Load Balancer

[root@master1 ~]# vim /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg

Install Kubernetes Packages on All Kubernetes Nodes

[root@master1 ~]# yum install -y kubelet kubeadm kubectl wget
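The command above installs whatever version is newest in the repository when you run it, which is not necessarily 1.23. A minimal sketch that pins the packages, assuming 1.23.4 (the version shown in the outputs later in this tutorial); K8S_VERSION, k8s_packages and install_k8s_packages are hypothetical names. The kubeadm configuration used later sets kubernetesVersion: stable, which kubeadm resolves to the newest stable release at init time, so setting it to v1.23.4 there keeps the control plane on the same version as the packages. Note also that the Kubernetes project has since deprecated and frozen the Google-hosted packages.cloud.google.com repositories in favour of pkgs.k8s.io, so the repository definition above may need updating on newer installs.

```shell
#!/usr/bin/env bash
# Sketch: install pinned kubelet/kubeadm/kubectl packages with yum.
# K8S_VERSION=1.23.4 is an assumption matching this tutorial's outputs.
K8S_VERSION="${K8S_VERSION:-1.23.4}"

# Print one name-version spec per package, e.g. kubeadm-1.23.4.
k8s_packages() {
  local pkg
  for pkg in kubelet kubeadm kubectl; do
    printf '%s-%s\n' "$pkg" "$K8S_VERSION"
  done
}

install_k8s_packages() {
  # word splitting of $(k8s_packages) is intended: one argument per package
  yum install -y $(k8s_packages)
}
```

After install_k8s_packages, kubeadm version -o short should print v1.23.4.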

[root@master1 ~]# systemctl enable kubelet && systemctl start kubelet

Install and Configure Etcd on master1

[root@master1 ~]# mkdir /etc/etcd /var/lib/etcd

Move the certificates to the /etc/etcd/ configuration directory:

[root@master1 ~]# mv ~/ca.pem ~/kubernetes.pem ~/kubernetes-key.pem /etc/etcd/

[root@master1 ~]# chmod -R a+r /etc/etcd/

Download the etcd binaries:

[root@master1 ~]# wget https://github.com/coreos/etcd/releases/download/v3.3.9/etcd-v3.3.9-linux-amd64.tar.gz

Extract the etcd binaries and move them to the /usr/local/bin directory:

[root@master1 ~]# tar xvzf etcd-v3.3.9-linux-amd64.tar.gz

[root@master1 ~]# mv etcd-v3.3.9-linux-amd64/etcd* /usr/local/bin/

Create an etcd systemd unit file:

[root@master1 ~]# vim /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \

--name 192.168.122.113 \

--cert-file=/etc/etcd/kubernetes.pem \

--key-file=/etc/etcd/kubernetes-key.pem \

--peer-cert-file=/etc/etcd/kubernetes.pem \

--peer-key-file=/etc/etcd/kubernetes-key.pem \

--trusted-ca-file=/etc/etcd/ca.pem \

--peer-trusted-ca-file=/etc/etcd/ca.pem \

--peer-client-cert-auth \

--client-cert-auth \

--initial-advertise-peer-urls https://192.168.122.113:2380 \

--listen-peer-urls https://192.168.122.113:2380 \

--listen-client-urls https://192.168.122.113:2379,https://127.0.0.1:2379 \

--advertise-client-urls https://192.168.122.113:2379 \

--initial-cluster-token etcd-cluster-0 \

--initial-cluster 192.168.122.113=https://192.168.122.113:2380,192.168.122.114=https://192.168.122.114:2380,192.168.122.115=https://192.168.122.115:2380 \

--initial-cluster-state new \

--data-dir=/var/lib/etcd/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Reload the daemon configuration, enable and start etcd, and finally verify the status:

[root@master1 ~]# systemctl daemon-reload

[root@master1 ~]# systemctl enable etcd

[root@master1 ~]# systemctl start etcd

[root@master1 ~]# systemctl status etcd

● etcd.service - etcd

Loaded: loaded (/etc/systemd/system/etcd.service; disabled; vendor preset: disabled)

Active: active (running) since Fri 2022-03-04 14:11:24 IST; 5h 45min ago

Docs: https://github.com/coreos

Main PID: 1205 (etcd)

CGroup: /system.slice/etcd.service

└─1205 /usr/local/bin/etcd --name 192.168.122.113 --cert-file=/etc/etcd/kubernetes.pem --key-file=/etc/etcd/kube...

Mar 04 19:35:59 master1.example.com etcd[1205]: store.index: compact 44890

Mar 04 19:35:59 master1.example.com etcd[1205]: finished scheduled compaction at 44890 (took 1.598551ms)

Mar 04 19:40:59 master1.example.com etcd[1205]: store.index: compact 45488

Mar 04 19:40:59 master1.example.com etcd[1205]: finished scheduled compaction at 45488 (took 1.0796ms)

Mar 04 19:45:59 master1.example.com etcd[1205]: store.index: compact 46088

Mar 04 19:45:59 master1.example.com etcd[1205]: finished scheduled compaction at 46088 (took 795.464µs)

Mar 04 19:50:59 master1.example.com etcd[1205]: store.index: compact 46686

Mar 04 19:50:59 master1.example.com etcd[1205]: finished scheduled compaction at 46686 (took 1.220982ms)

Mar 04 19:55:59 master1.example.com etcd[1205]: store.index: compact 47284

Mar 04 19:55:59 master1.example.com etcd[1205]: finished scheduled compaction at 47284 (took 1.977062ms)

Installing and configuring Etcd on master2

[root@master2 ~]# mkdir /etc/etcd /var/lib/etcd

[root@master2 ~]# mv ~/ca.pem ~/kubernetes.pem ~/kubernetes-key.pem /etc/etcd/

[root@master2 ~]# chmod -R a+r /etc/etcd/

Download the etcd binaries:

[root@master2 ~]# wget https://github.com/coreos/etcd/releases/download/v3.3.9/etcd-v3.3.9-linux-amd64.tar.gz

[root@master2 ~]# tar xvzf etcd-v3.3.9-linux-amd64.tar.gz

[root@master2 ~]# mv etcd-v3.3.9-linux-amd64/etcd* /usr/local/bin/

Create an etcd systemd unit file:

[root@master2 ~]# vim /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \

--name 192.168.122.114 \

--cert-file=/etc/etcd/kubernetes.pem \

--key-file=/etc/etcd/kubernetes-key.pem \

--peer-cert-file=/etc/etcd/kubernetes.pem \

--peer-key-file=/etc/etcd/kubernetes-key.pem \

--trusted-ca-file=/etc/etcd/ca.pem \

--peer-trusted-ca-file=/etc/etcd/ca.pem \

--peer-client-cert-auth \

--client-cert-auth \

--initial-advertise-peer-urls https://192.168.122.114:2380 \

--listen-peer-urls https://192.168.122.114:2380 \

--listen-client-urls https://192.168.122.114:2379,https://127.0.0.1:2379 \

--advertise-client-urls https://192.168.122.114:2379 \

--initial-cluster-token etcd-cluster-0 \

--initial-cluster 192.168.122.113=https://192.168.122.113:2380,192.168.122.114=https://192.168.122.114:2380,192.168.122.115=https://192.168.122.115:2380 \

--initial-cluster-state new \

--data-dir=/var/lib/etcd/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target
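The unit files on master1, master2 and master3 differ only in the node's own IP address. As a sketch, they can be generated from one template; etcd_unit, etcd_initial_cluster and ETCD_NODES are hypothetical names, and the flags are copied from the unit file above.

```shell
#!/usr/bin/env bash
# Sketch: print the etcd systemd unit used in this tutorial for one master.
# ETCD_NODES mirrors the three masters; adjust it for a different lab.
ETCD_NODES=(192.168.122.113 192.168.122.114 192.168.122.115)

# Build "ip=https://ip:2380,..." for --initial-cluster.
etcd_initial_cluster() {
  local ip out=""
  for ip in "${ETCD_NODES[@]}"; do
    out+="${out:+,}${ip}=https://${ip}:2380"
  done
  echo "$out"
}

etcd_unit() {
  local ip="$1"
  [ -n "$ip" ] || { echo "usage: etcd_unit <node-ip>" >&2; return 1; }
  # Unquoted heredoc: ${ip} and $(...) expand, and \\ becomes a single \.
  cat <<EOF
[Unit]
Description=etcd
Documentation=https://github.com/coreos

[Service]
ExecStart=/usr/local/bin/etcd \\
  --name ${ip} \\
  --cert-file=/etc/etcd/kubernetes.pem \\
  --key-file=/etc/etcd/kubernetes-key.pem \\
  --peer-cert-file=/etc/etcd/kubernetes.pem \\
  --peer-key-file=/etc/etcd/kubernetes-key.pem \\
  --trusted-ca-file=/etc/etcd/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ca.pem \\
  --peer-client-cert-auth \\
  --client-cert-auth \\
  --initial-advertise-peer-urls https://${ip}:2380 \\
  --listen-peer-urls https://${ip}:2380 \\
  --listen-client-urls https://${ip}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls https://${ip}:2379 \\
  --initial-cluster-token etcd-cluster-0 \\
  --initial-cluster $(etcd_initial_cluster) \\
  --initial-cluster-state new \\
  --data-dir=/var/lib/etcd/etcd
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
}
```

On master3, for example, etcd_unit 192.168.122.115 > /etc/systemd/system/etcd.service writes the unit, after which the usual daemon-reload, enable and start steps apply.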

Reload the daemon configuration, enable and start the etcd service, and verify the status:

[root@master2 ~]# systemctl daemon-reload

[root@master2 ~]# systemctl enable etcd

[root@master2 ~]# systemctl start etcd

[root@master2 ~]# systemctl status etcd

● etcd.service - etcd

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2022-03-04 13:44:37 IST; 6h ago

Docs: https://github.com/coreos

Main PID: 1694 (etcd)

CGroup: /system.slice/etcd.service

└─1694 /usr/local/bin/etcd --name 192.168.122.114 --cert-file=/etc/etcd/kubernetes.pem --key-file=/etc/etcd/kube...

Mar 04 19:40:59 master2.example.com etcd[1694]: store.index: compact 45488

Mar 04 19:40:59 master2.example.com etcd[1694]: finished scheduled compaction at 45488 (took 1.645368ms)

Mar 04 19:45:59 master2.example.com etcd[1694]: store.index: compact 46088

Mar 04 19:45:59 master2.example.com etcd[1694]: finished scheduled compaction at 46088 (took 655.815µs)

Mar 04 19:50:59 master2.example.com etcd[1694]: store.index: compact 46686

Mar 04 19:50:59 master2.example.com etcd[1694]: finished scheduled compaction at 46686 (took 1.34232ms)

Mar 04 19:55:59 master2.example.com etcd[1694]: store.index: compact 47284

Mar 04 19:55:59 master2.example.com etcd[1694]: finished scheduled compaction at 47284 (took 1.620762ms)

Mar 04 20:00:59 master2.example.com etcd[1694]: store.index: compact 47887

Mar 04 20:00:59 master2.example.com etcd[1694]: finished scheduled compaction at 47887 (took 1.355101ms)

Installing and configuring Etcd on master3

[root@master3 ~]# mkdir /etc/etcd /var/lib/etcd

[root@master3 ~]# mv ~/ca.pem ~/kubernetes.pem ~/kubernetes-key.pem /etc/etcd/

Download the etcd binaries:

[root@master3 ~]# wget https://github.com/coreos/etcd/releases/download/v3.3.9/etcd-v3.3.9-linux-amd64.tar.gz

[root@master3 ~]# tar xvzf etcd-v3.3.9-linux-amd64.tar.gz

[root@master3 ~]# mv etcd-v3.3.9-linux-amd64/etcd* /usr/local/bin/

Create an etcd systemd unit file:

[root@master3 ~]# vim /etc/systemd/system/etcd.service

[Unit]

Description=etcd

Documentation=https://github.com/coreos

[Service]

ExecStart=/usr/local/bin/etcd \

--name 192.168.122.115 \

--cert-file=/etc/etcd/kubernetes.pem \

--key-file=/etc/etcd/kubernetes-key.pem \

--peer-cert-file=/etc/etcd/kubernetes.pem \

--peer-key-file=/etc/etcd/kubernetes-key.pem \

--trusted-ca-file=/etc/etcd/ca.pem \

--peer-trusted-ca-file=/etc/etcd/ca.pem \

--peer-client-cert-auth \

--client-cert-auth \

--initial-advertise-peer-urls https://192.168.122.115:2380 \

--listen-peer-urls https://192.168.122.115:2380 \

--listen-client-urls https://192.168.122.115:2379,https://127.0.0.1:2379 \

--advertise-client-urls https://192.168.122.115:2379 \

--initial-cluster-token etcd-cluster-0 \

--initial-cluster 192.168.122.113=https://192.168.122.113:2380,192.168.122.114=https://192.168.122.114:2380,192.168.122.115=https://192.168.122.115:2380 \

--initial-cluster-state new \

--data-dir=/var/lib/etcd/etcd

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Reload the daemon configuration, enable and start the etcd service, and verify the status:

[root@master3 ~]# systemctl daemon-reload

[root@master3 ~]# systemctl enable etcd

[root@master3 ~]# systemctl start etcd

[root@master3 ~]# systemctl status etcd

● etcd.service - etcd

Loaded: loaded (/etc/systemd/system/etcd.service; enabled; vendor preset: disabled)

Active: active (running) since Fri 2022-03-04 13:54:49 IST; 6h ago

Docs: https://github.com/coreos

Main PID: 13802 (etcd)

CGroup: /system.slice/etcd.service

└─13802 /usr/local/bin/etcd --name 192.168.122.115 --cert-file=/etc/etcd/kubernetes.pem --key-file=/etc/etcd/kub...

Mar 04 19:46:00 master3.example.com etcd[13802]: store.index: compact 46088

Mar 04 19:46:00 master3.example.com etcd[13802]: finished scheduled compaction at 46088 (took 833.614µs)

Mar 04 19:51:00 master3.example.com etcd[13802]: store.index: compact 46686

Mar 04 19:51:00 master3.example.com etcd[13802]: finished scheduled compaction at 46686 (took 1.174917ms)

Mar 04 19:56:00 master3.example.com etcd[13802]: store.index: compact 47284

Mar 04 19:56:00 master3.example.com etcd[13802]: finished scheduled compaction at 47284 (took 1.402167ms)

Mar 04 20:01:00 master3.example.com etcd[13802]: store.index: compact 47887

Mar 04 20:01:00 master3.example.com etcd[13802]: finished scheduled compaction at 47887 (took 1.498206ms)

Mar 04 20:06:00 master3.example.com etcd[13802]: store.index: compact 48484

Mar 04 20:06:00 master3.example.com etcd[13802]: finished scheduled compaction at 48484 (took 848.821µs)

Verify from a master node that the etcd cluster is up and running:

[root@master1 ~]# etcdctl -C https://192.168.122.113:2379 --ca-file=/etc/etcd/ca.pem --cert-file=/etc/etcd/kubernetes.pem --key-file=/etc/etcd/kubernetes-key.pem cluster-health

member 1c3e7696f7178284 is healthy: got healthy result from https://192.168.122.114:2379

member a3dc04b2021efbca is healthy: got healthy result from https://192.168.122.115:2379

member b19c0c29b462db2f is healthy: got healthy result from https://192.168.122.113:2379

cluster is healthy
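etcdctl 3.3 uses the v2 API by default, which is why cluster-health and the --ca-file style flags work here. With ETCDCTL_API=3 the same check becomes endpoint health, and the TLS flags are named --cacert, --cert and --key. A sketch assuming the certificate paths used above; etcd_endpoints and etcd_v3 are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: run etcdctl against all three members through the v3 API.
# Assumes the certificate paths used in this tutorial and etcdctl 3.3,
# where ETCDCTL_API=3 must be set explicitly (v2 is the default there).
ETCD_NODES=(192.168.122.113 192.168.122.114 192.168.122.115)

# Build "https://ip:2379,..." for --endpoints.
etcd_endpoints() {
  local ip out=""
  for ip in "${ETCD_NODES[@]}"; do
    out+="${out:+,}https://${ip}:2379"
  done
  echo "$out"
}

# Pass the etcdctl subcommand as arguments, e.g. "endpoint health".
etcd_v3() {
  ETCDCTL_API=3 etcdctl \
    --endpoints="$(etcd_endpoints)" \
    --cacert=/etc/etcd/ca.pem \
    --cert=/etc/etcd/kubernetes.pem \
    --key=/etc/etcd/kubernetes-key.pem \
    "$@"
}
```

On master1, etcd_v3 endpoint health checks each member, and etcd_v3 member list -w table shows the membership as a table.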

[root@master1 ~]# etcdctl -C https://192.168.122.113:2379 --ca-file=/etc/etcd/ca.pem --cert-file=/etc/etcd/kubernetes.pem --key-file=/etc/etcd/kubernetes-key.pem member list

1c3e7696f7178284: name=192.168.122.114 peerURLs=https://192.168.122.114:2380 clientURLs=https://192.168.122.114:2379 isLeader=true

a3dc04b2021efbca: name=192.168.122.115 peerURLs=https://192.168.122.115:2380 clientURLs=https://192.168.122.115:2379 isLeader=false

b19c0c29b462db2f: name=192.168.122.113 peerURLs=https://192.168.122.113:2380 clientURLs=https://192.168.122.113:2379 isLeader=false

Initialize Kubernetes Master Nodes

We will initialize the Kubernetes master nodes, starting with master1. First, create the kubeadm configuration file:

[root@master1 ~]# vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: stable
controlPlaneEndpoint: "lb.example.com:6443"
etcd:
  external:
    endpoints:
      - https://192.168.122.113:2379
      - https://192.168.122.114:2379
      - https://192.168.122.115:2379
    caFile: /etc/etcd/ca.pem
    certFile: /etc/etcd/kubernetes.pem
    keyFile: /etc/etcd/kubernetes-key.pem

Before initializing master1 as the first control plane node, you can optionally download the required images:

[root@master1 ~]# kubeadm config images pull

[config/images] Pulled k8s.gcr.io/kube-apiserver:v1.23.4

[config/images] Pulled k8s.gcr.io/kube-controller-manager:v1.23.4

[config/images] Pulled k8s.gcr.io/kube-scheduler:v1.23.4

[config/images] Pulled k8s.gcr.io/kube-proxy:v1.23.4

[config/images] Pulled k8s.gcr.io/pause:3.6

[config/images] Pulled k8s.gcr.io/etcd:3.5.1-0

[config/images] Pulled k8s.gcr.io/coredns/coredns:v1.8.6

Now initialize master1:

[root@master1 ~]# kubeadm init --config kubeadm-config.yaml --upload-certs

Keep the output handy: it contains the kubeadm join commands needed to add the other master nodes as control plane nodes and to join the worker nodes to the cluster.
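The join commands in the kubeadm init output contain a bootstrap token that is valid for 24 hours by default and, because of --upload-certs, a certificate key for certificates that are deleted from the cluster after two hours. If either has expired before you join the remaining nodes, both can be regenerated on master1. A sketch, assuming it runs as root on master1 in the directory holding kubeadm-config.yaml (passing that file is an assumption so the external etcd client certificates are uploaded too) and that upload-certs prints the new key on its last output line; new_join_commands and control_plane_join_cmd are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Sketch: rebuild the worker and control plane join commands on master1
# after the original token or certificate key has expired.

# $1: worker join command, $2: certificate key
control_plane_join_cmd() {
  echo "$1 --control-plane --certificate-key $2"
}

new_join_commands() {
  local worker_cmd cert_key
  # creates a new bootstrap token and prints the full worker join command
  worker_cmd="$(kubeadm token create --print-join-command)" || return 1
  # re-uploads the control plane certificates; the key is the last line
  cert_key="$(kubeadm init phase upload-certs --upload-certs --config kubeadm-config.yaml | tail -n 1)"
  [ -n "$cert_key" ] || return 1
  echo "worker:        $worker_cmd"
  echo "control plane: $(control_plane_join_cmd "$worker_cmd" "$cert_key")"
}
```

Run new_join_commands on master1 and use the printed commands on master2, master3 and the worker nodes in place of the expired ones.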

[root@master1 ~]# mkdir -p $HOME/.kube

[root@master1 ~]# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@master1 ~]# sudo chown $(id -u):$(id -g) $HOME/.kube/config

Let's configure networking for the cluster by installing the Weave Net CNI plugin:

[root@master1 ~]# kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"

You can choose a different CNI plugin instead; the Kubernetes documentation lists the available networking add-ons.
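The command above has the manifest generated by cloud.weave.works, a service operated by Weaveworks that may no longer be reachable. The Weave Net project also publishes the manifest as a GitHub release asset, and the sketch below applies that file instead. The version v2.8.1 and the helper names weave_manifest_url and install_weave are assumptions, so check the project's releases page before relying on them.

```shell
#!/usr/bin/env bash
# Sketch: apply Weave Net from a manifest attached to a GitHub release
# instead of the cloud.weave.works generator. WEAVE_VERSION is an assumption.
WEAVE_VERSION="${WEAVE_VERSION:-v2.8.1}"

weave_manifest_url() {
  echo "https://github.com/weaveworks/weave/releases/download/${WEAVE_VERSION}/weave-daemonset-k8s.yaml"
}

# Run on master1 once kubectl is configured.
install_weave() {
  kubectl apply -f "$(weave_manifest_url)"
}
```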

Let's check the kube-system pods from master1:

[root@master1 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-64897985d-mg8g5 1/1 Running 1 (12h ago) 20h

coredns-64897985d-wjhwp 1/1 Running 1 (12h ago) 20h

kube-apiserver-master1.example.com 1/1 Running 4 (12h ago) 20h

kube-controller-manager-master1.example.com 1/1 Running 4 (12h ago) 20h

kube-proxy-2b84t 1/1 Running 2 (12h ago) 20h

kube-scheduler-master1.example.com 1/1 Running 4 (12h ago) 20h

weave-net-26rz2 2/2 Running 6 (12h ago) 20h

[root@master1 ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master1.example.com Ready control-plane,master 20h v1.23.4

Let's bootstrap master2. Run the kubeadm join command for additional control plane nodes, as printed in the kubeadm init output on master1:

[root@master2 ~]# kubeadm join lb.example.com:6443 --token p22h48.0z4t9sspe0lb0s89 --discovery-token-ca-cert-hash sha256:626eeed8173991f6790ca87ceb8377a3e1b7fb72a13c462e124b2bc1b9ec674a --control-plane --certificate-key f1cd5385bff185ee04ee1e3961e4aa966f24dcf2afa9c9b0ed025c3573af4983

Run the same kubeadm join command on master3:

[root@master3 ~]# kubeadm join lb.example.com:6443 --token p22h48.0z4t9sspe0lb0s89 --discovery-token-ca-cert-hash sha256:626eeed8173991f6790ca87ceb8377a3e1b7fb72a13c462e124b2bc1b9ec674a --control-plane --certificate-key f1cd5385bff185ee04ee1e3961e4aa966f24dcf2afa9c9b0ed025c3573af4983

[root@master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.example.com Ready control-plane,master 20h v1.23.4

master2.example.com Ready control-plane,master 20h v1.23.4

master3.example.com Ready control-plane,master 20h v1.23.4

[root@master1 ~]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

coredns-64897985d-mg8g5 1/1 Running 1 (12h ago) 20h

coredns-64897985d-wjhwp 1/1 Running 1 (12h ago) 20h

kube-apiserver-master1.example.com 1/1 Running 4 (12h ago) 20h

kube-apiserver-master2.example.com 1/1 Running 3 (12h ago) 20h

kube-apiserver-master3.example.com 1/1 Running 3 (12h ago) 20h

kube-controller-manager-master1.example.com 1/1 Running 4 (12h ago) 20h

kube-controller-manager-master2.example.com 1/1 Running 2 (12h ago) 20h

kube-controller-manager-master3.example.com 1/1 Running 3 (12h ago) 20h

kube-proxy-2b84t 1/1 Running 2 (12h ago) 20h

kube-proxy-4nxdj 1/1 Running 3 (12h ago) 20h

kube-proxy-6st5h 1/1 Running 2 (12h ago) 20h

kube-scheduler-master1.example.com 1/1 Running 4 (12h ago) 20h

kube-scheduler-master2.example.com 1/1 Running 2 (12h ago) 20h

kube-scheduler-master3.example.com 1/1 Running 3 (12h ago) 20h

weave-net-26rz2 2/2 Running 6 (12h ago) 20h

weave-net-5jhz8 2/2 Running 6 (12h ago) 20h

weave-net-8qc4z 2/2 Running 2 (12h ago) 20h

Initialize the Worker Nodes

Let's bootstrap the worker nodes with the kubeadm join command:

[root@node1 ~]# kubeadm join lb.example.com:6443 --token p22h48.0z4t9sspe0lb0s89 --discovery-token-ca-cert-hash sha256:626eeed8173991f6790ca87ceb8377a3e1b7fb72a13c462e124b2bc1b9ec674a

[root@node2 ~]# kubeadm join lb.example.com:6443 --token p22h48.0z4t9sspe0lb0s89 --discovery-token-ca-cert-hash sha256:626eeed8173991f6790ca87ceb8377a3e1b7fb72a13c462e124b2bc1b9ec674a

[root@node3 ~]# kubeadm join lb.example.com:6443 --token p22h48.0z4t9sspe0lb0s89 --discovery-token-ca-cert-hash sha256:626eeed8173991f6790ca87ceb8377a3e1b7fb72a13c462e124b2bc1b9ec674a

[root@node4 ~]# kubeadm join lb.example.com:6443 --token p22h48.0z4t9sspe0lb0s89 --discovery-token-ca-cert-hash sha256:626eeed8173991f6790ca87ceb8377a3e1b7fb72a13c462e124b2bc1b9ec674a

List the nodes by running the following command from any master node:

[root@master1 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master1.example.com Ready control-plane,master 21h v1.23.4

master2.example.com Ready control-plane,master 20h v1.23.4

master3.example.com Ready control-plane,master 20h v1.23.4

node1.example.com Ready <none> 20h v1.23.4

node2.example.com Ready <none> 20h v1.23.4

node3.example.com Ready <none> 20h v1.23.4

node4.example.com Ready <none> 20h v1.23.4

Let's create an nginx pod to verify that the cluster works:

[root@master1 ~]# kubectl run nginx --image nginx

pod/nginx created

[root@master1 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx 1/1 Running 0 4m17s 10.38.0.1 node1.example.com <none> <none>

[root@master1 ~]# curl 10.38.0.1

<!DOCTYPE html>

<html>

<head>

<title>Welcome to nginx!</title>

<style>

html { color-scheme: light dark; }

body { width: 35em; margin: 0 auto;

font-family: Tahoma, Verdana, Arial, sans-serif; }

</style>

</head>

<body>

<h1>Welcome to nginx!</h1>

<p>If you see this page, the nginx web server is successfully installed and

working. Further configuration is required.</p>

<p>For online documentation and support please refer to

<a href="http://nginx.org/">nginx.org</a>.<br/>

Commercial support is available at

<a href="http://nginx.com/">nginx.com</a>.</p>

<p><em>Thank you for using nginx.</em></p>

</body>

</html>

Conclusion

Hurrah, we have successfully installed and configured a multi-master Kubernetes cluster with kubeadm on CentOS 7.x.